Intro

Adam: Hey, so before we get into it, why don’t you state your name and what you do?

Jason: My name is Jason Gauci. And yeah, I bring machine learning to billions of people.

Adam: Hello and welcome to CoRecursive, the stories behind the code, I’m Adam Gordon Bell. Jason has worked on YouTube recommendations. He was an early contributor to TensorFlow, the open source machine learning platform. His thesis work was cited by DeepMind. They were the people who beat all human players at Go, and at StarCraft, I think. And who knows what else?

If you ever wanted to learn about machine learning, you could do worse than have Jason teach you. But what I find so fascinating with Jason is he recognized this problem that was being solved the wrong way and set out to find a solution to it.

The problem was making recommendations, like on Amazon, people who bought this book might like that book. He didn’t exactly know how to solve the problem, but he knew it could be done better. So that’s the show today. Jason’s going to share his story about how he would eventually change the way Facebook works. And we’ll learn about reinforcement learning and neural nets, and just about the stress of pursuing research at a large company. It all started in 2006 when Jason was in grad school.

PHD Program

Jason: Yeah, so I went to college, picked computer science, and I remember my parents found it a little strange. They said, “Oh, you could be a doctor or a lawyer or something, you have the brains for it.” And then at one point my dad thought it was kind of like going to school to be a TV repairman. And so he wasn’t really sure, he’s like, “Are you sure you really want to do this? Now I could just buy another TV or another computer if it breaks.” And to this day, I have to explain to people, I really don’t know how to fix a computer. If this laptop broke right now, I’d just have to do the same thing my parents do and just go get another one, I have no idea.

Capture The Flag

Jason: But I had an option to do a Master’s, PhD hybrid or basically do it all in one shot. And after two years, if I wanted to call it quits, then I would get the Master’s degree. Yeah, at the time I thought I will just do the Master’s, I didn’t really plan on getting a PhD. But actually the very last class that I took in my Master’s was a class called neuro evolution, which was all about trying to solve problems through neural networks and through evolutionary computation.

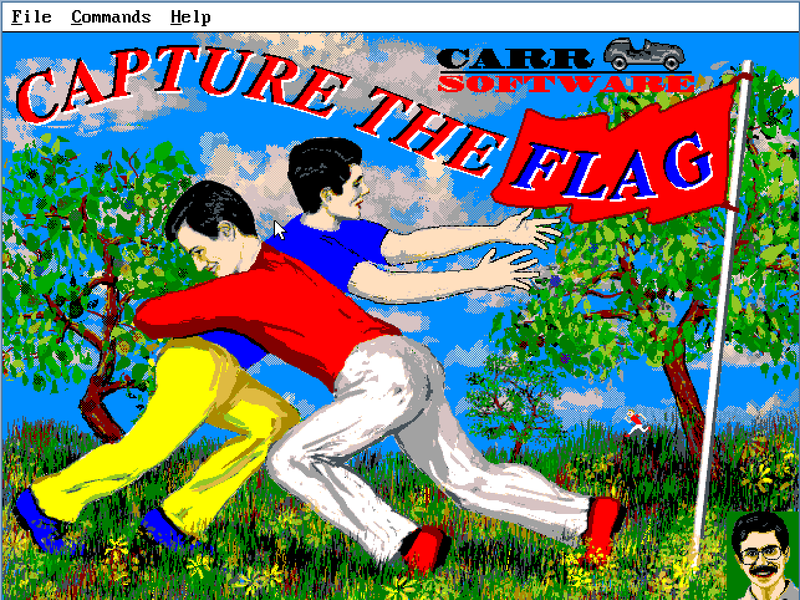

So America Online had this capture the flag game for free. And I remember I downloaded it on a 56K modem, it took forever. And it was basically like a turn-based capture the flag where you played as one person, and there was a friendly AI for the other three players, and then there was a four-player enemy AI, and you’re trying to capture the flag. And if the enemy touched you, you’re in jail, but the friendly AI could bail you out of jail.

Adam: I think I played this. Do you get to see more and more of the ground as you travel?

Jason: Yeah, that’s right. Yeah. Yeah. Do you remember the name of it?

Adam: So the game is called Capture the Flag. If you’ve not played it, you view a large field with trees in it from overhead, and you can only see where your players have been, there’s a fog of war like in StarCraft. Except it’s turn-based, you move a certain number of moves and then your players freeze there, and the computer gets to take its turn and move its players.

What Is a Neural Net?

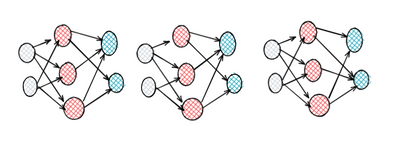

Jason: But for my neuro evolution course, my final project, I recreated this game, capture the flag. And then I built an AI for it using neuro evolution. And so just to unpack that, neural networks are effectively like function approximators that are inspired by the way the brain works. And so if you imagine graphing a function on your calculator, I’m sure everyone’s done this on their TI 85. You can punch in Y equals X squared and it’ll draw a little parabola on your TI 85 or whatever the calculator is nowadays. And so what a neural network will do is it will look at a lot of data and it can represent almost any function.

Adam: So if it’s your original graph thing, it’s like telling it X is two, Y is three. You’re feeding it all these pairs.

Jason: Exactly. Yep.

Adam: Memorizes them.

Jason: Yep. But because there’s contradictions and there’s noise in the data and all of that, you won’t tell it exactly, force it to be Y is three when X is three. But it’s a hint. You say, “Hey, when X is three, Y’s probably three.” So if you’re not there, get a little bit closer to there. And you do this over and over again for so many different Xs that you end up with some shape that won’t pass through every point, it’s usually impossible, but it will get close to a lot of the points.
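As a rough illustration of the "get a little bit closer" idea Jason describes, here is a minimal sketch in plain Python: a tiny one-hidden-layer network is nudged toward noisy samples of Y equals X squared, one pair at a time. The network size, learning rate, and noise level are arbitrary assumptions for the example, not anything from Jason's work.

```python
import math
import random

rng = random.Random(0)

# Noisy samples of y = x^2: each pair is a hint, not an exact requirement.
data = []
for _ in range(200):
    x = rng.uniform(-2.0, 2.0)
    data.append((x, x * x + rng.gauss(0.0, 0.1)))

HIDDEN = 8  # number of hidden units (arbitrary choice)
w1 = [rng.gauss(0.0, 1.0) for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [rng.gauss(0.0, 0.1) for _ in range(HIDDEN)]
b2 = 0.0

def predict(x):
    return sum(w2[j] * math.tanh(w1[j] * x + b1[j]) for j in range(HIDDEN)) + b2

def mean_squared_error():
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)

loss_before = mean_squared_error()
learning_rate = 0.01
for _ in range(200):  # passes over the data
    for x, y in data:
        hidden = [math.tanh(w1[j] * x + b1[j]) for j in range(HIDDEN)]
        # How far the current guess is from the hinted value.
        error = sum(w2[j] * hidden[j] for j in range(HIDDEN)) + b2 - y
        for j in range(HIDDEN):
            # Backpropagate the error through the tanh unit.
            grad_hidden = error * w2[j] * (1.0 - hidden[j] ** 2)
            # Move every weight a small step toward a better guess.
            w2[j] -= learning_rate * error * hidden[j]
            w1[j] -= learning_rate * grad_hidden * x
            b1[j] -= learning_rate * grad_hidden
        b2 -= learning_rate * error
loss_after = mean_squared_error()

print(f"error before: {loss_before:.3f}, after: {loss_after:.3f}")
```

The curve it ends up with will not pass through every noisy point, but the average error should drop as the nudges accumulate.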

Where It Fails

Adam: This is basically back propagation. It’s a form of supervised learning. You’re training the neural net by supervising it and telling it when it gets the wrong answer, what it should have gotten instead. And to do this, you need to know what the right answer is so that you can train it.

Jason: And so that works great when you have a person going and telling you the perfect answer or the right answer. But for puzzles and games, for example, you don’t have that. So look at Go, to this day, people haven’t found the perfect Go game, a Go game for people who are playing perfectly. And so you don’t have that. And so you have to do something different, you have to learn from experience. So you just say, “Look, this Go game, that’s a really good move. That’s better than any move we’ve ever seen at this point in the game.” It doesn’t mean it’s the best, it doesn’t mean that your goal should be to always make that move, but it’s really good.

A simple way to do that is have a neural network and have it play a lot of Go, and then make a subtle change to it, and have it play a lot of Go again. And then say, “Okay, did that change make this player win more games?”

If it did, then you keep the change. And if it didn’t, then you throw it away. And so if you do this enough times, you will end up in what we call a local optimum. In other words, you’re making these small changes, you’re picking all the changes that make the Go player better, and eventually you just can’t find a small change that makes the player better. And so you could think of evolutionary computation at a high level as doing something like that, but it’s doing it at a really large scale.
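The keep-it-if-it-helps loop Jason describes can be sketched as simple hill climbing. In this sketch the `fitness` function is a hypothetical stand-in for "play a lot of Go and count the wins"; a real player would be a neural network evaluated over many games.

```python
import random

def fitness(weights):
    # Hypothetical stand-in for "win rate over many games": higher is
    # better, and the best possible score (0.0) is at [1, -2, 3].
    target = [1.0, -2.0, 3.0]
    return -sum((w - t) ** 2 for w, t in zip(weights, target))

def hill_climb(weights, steps=2000, scale=0.1, seed=0):
    rng = random.Random(seed)
    best = list(weights)
    best_score = fitness(best)
    for _ in range(steps):
        # Make a subtle change to the current player.
        candidate = [w + rng.gauss(0.0, scale) for w in best]
        score = fitness(candidate)
        # Keep the change only if the player got better; otherwise discard it.
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score

start = [0.0, 0.0, 0.0]
final, final_score = hill_climb(start)
print(fitness(start), final_score)
```

This toy score has a single peak, so the climb ends near it. With a real game the same loop can stall at a local optimum, which is the limitation Jason points out.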

So maybe you have a thousand small changes and 500 of them make the player better. And you can adopt all 500 of those different players and the existing player, you can take all 501 of those players and make a player that’s step-wise better, that’s better in a big way. And you would just keep doing that.

Adam: So this is what Jason learned in his neuro evolution class. He would create all these generations of players, which had random changes, and like evolution, have them play capture the flag against each other, slowly breeding better and better players.
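Here is a hedged sketch of the population version: try a thousand small changes per generation, keep the ones that help, and blend them with the existing player. Averaging the winners is just one simple way to combine them and is an assumption of this example; real neuroevolution methods use more elaborate selection and recombination, and `fitness` is again a hypothetical stand-in for playing games.

```python
import random

def fitness(weights):
    # Hypothetical stand-in for "how often this player wins".
    target = [1.0, -2.0, 3.0]
    return -sum((w - t) ** 2 for w, t in zip(weights, target))

def evolve(weights, generations=30, population=1000, scale=0.1, seed=0):
    rng = random.Random(seed)
    current = list(weights)
    for _ in range(generations):
        base = fitness(current)
        # A thousand players, each with a small random change.
        mutants = [[w + rng.gauss(0.0, scale) for w in current]
                   for _ in range(population)]
        # Keep only the changes that made the player better.
        winners = [m for m in mutants if fitness(m) > base]
        # Blend the winners with the existing player (simple averaging).
        pool = winners + [current]
        current = [sum(column) / len(pool) for column in zip(*pool)]
    return current

start = [0.0, 0.0, 0.0]
evolved = evolve(start)
print(fitness(start), fitness(evolved))
```

Averaging is safe here only because this toy score has a single smooth peak; for a real network, blending weights this way is not guaranteed to help.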

Jason Watches His Creation

Adam: Was there a moment where you tested out your algorithm? Did you try to play it and capture the flag?

Jason: Yeah, the real aha moment was, having this God’s eye view without the fog of war, because I was just an observer, and watching the AI. And specifically watching this almost like wolf pack behavior, where three players would surround a player and trap them. Just seeing that thing that you’ve seen in nature just emerge organically, that to me was amazing.

That was unbelievable.

When I saw all the players converge and capture and do this methodical thing and then take the flag. And even, I think at one point two of them had been captured, and so the other two just decided to go for the flag and just forget about any strategy and just go for broke.

Adam: Did you watch it and anthropomorphize? Did you cheer for one team?

Jason: Yeah. Yeah. Yeah, I did. Naturally, you want to cheer for the underdog. So yeah, you would see this scenario play out where they would chase after one person, even though there was four of them and only two of the other team, they would chase after one and the other one would get the flag.

Adam: I didn’t follow the strategy. One runs, and then…

Jason: Yeah. So one would run and the other four would all chase after that one. And then the second one would go and get the flag and win.

Adam: It’s like a decoy.

Jason: Yeah, but it would only happen when the AI was disadvantaged. So the way it worked was there’s four players, so there’s a bunch of sensory information that was just repeated four times to make the input of the network. And I guess even though it’s playing against itself, it learned that when two of those inputs are completely shut off, which is what happened when they were captured, to then execute this Hail Mary strategy. And yeah, it was just super fun to watch that play out. And I would remember just sitting in the lab cheering for this one person and they would try to come back. In your head, it was hard to know, because it was a big grid, can they get back quick enough to catch this person? So it’d be pretty suspenseful.
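To make the input layout concrete, here is a small sketch of how a network input could be built by repeating one block of sensor values per player and shutting the block off when a player is captured. The three sensor values per player and the dictionary layout are hypothetical; the transcript does not say what the real sensors were.

```python
SENSORS_PER_PLAYER = 3  # hypothetical number of sensor readings per player

def build_network_input(players):
    """Concatenate one sensor block per player; captured players become zeros."""
    inputs = []
    for player in players:
        if player["captured"]:
            # A captured player's inputs are completely shut off.
            inputs.extend([0.0] * SENSORS_PER_PLAYER)
        else:
            inputs.extend(player["sensors"])
    return inputs

team = [
    {"captured": False, "sensors": [0.2, 0.9, 0.4]},
    {"captured": True, "sensors": [0.7, 0.1, 0.3]},
    {"captured": True, "sensors": [0.5, 0.5, 0.8]},
    {"captured": False, "sensors": [0.6, 0.3, 0.1]},
]
network_input = build_network_input(team)
print(network_input)
```

With two of the four blocks zeroed out, the network sees a distinctive pattern, which is the kind of signal it could have learned to associate with the all-or-nothing run for the flag.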

And just seeing all of that, just all encoded in this network. Neural excitation backprop and all these things for understanding what a neural network is doing, all this stuff hadn’t been invented yet. So it was just a black box and it was just magic. You would run it on the university cluster, who knows what it would do, you would get it back a few days later and you would just see all this amazing emergent behavior. That to me just really lit the spark.

And so I had already accepted a job with the intention of just getting a Master’s and leaving because I just didn’t see anything that inspired me. But right there at the 11th hour, I took this course. And I said, “This is amazing.” The fact that it actually worked and it exploited things that I would’ve never thought of, that really is what lit the spark.

The Disappointed Advisor and The PHD Fellowship

Adam: So based on this cool capture the flag experience, Jason decides to do his PhD and he gets a fellowship.

Jason: So the entire time since we’ve been born all the way through to when I was doing my PhD, we were in this neural network winter, where people had given up on this idea of function approximation.

This fellowship came from the natural language processing faculty members. So you have to have been nominated for the presidential fellowship, and I was nominated by Dr. Gomez who worked in natural language processing. He really vouched for me. When he found out that I wanted to do neuroevolution, yeah, he was extremely disappointed. He was particularly disappointed that I wanted to do this area that had no future. I remember him saying things like, “Basically there’s no knowledge in the neural network, it’s just a function.” That’s effectively what his argument was. And yeah, he was a total unbeliever.

Adam: So we now know that neural networks would have a resurgence, especially in supervised learning. But Jason had no way to know that, he just knew that he was seeing really amazing results, and that he was having a lot of fun.

Jason: So I actually worked full time through the entire part of the PhD starting from the thesis. And so that was a wild experience. So I would wake up early in the morning, I would take a look at what evolved last night in the cluster. If it didn’t crash or anything, I would either monitor it, or if it was finished, I would look at some results. I’d play a little bit of Counter-Strike at 8:00 am.

Off To Google

Adam: This is around 2009. And Jason ended up developing a method called HyperNEAT for his thesis.

Jason: So I graduated college, I worked an extra year and then I ended up going to Google. I had a few friends who were at Google and they said, “Hey, you have to come here, this is amazing. It’s like Hunger Games for nerds. There’re tons of nerds out there.” I’m like, “Okay, this sounds right up my alley.” I did the interview, I was like, “Oh, this is paradise. There’s a real chance to focus.” I met tons of really smart people, I thought, “This is absolutely amazing.”

YouTube Recommendations and TensorFlow

Jason: But after about a year of that, that’s when Andrew Ng joined Google and that’s when deep learning really started to take off. I reached out to him and reached out to other people that were in the research wing of Google, and I said, “I have this experience. I love neural nets.” I said, “I want to study this with you guys and make progress.”

So I ended up transferring to research. So then when the deep learning revolution really hit, I was working on a lot of that stuff. So I was working really closely with the Google Brain team. And I wrote a chunk of what ended up becoming TensorFlow later. I’m sure someone else rewrote it, but I wrote a really early version of parts of TensorFlow. The team I was on built the YouTube recommendations algorithm. So figuring out, when you watch a YouTube video and on the right hand side, you get those recommended videos, figure out what to put there.

This Is a Reinforcement Learning Problem

Jason: And the whole time I thought, “Wow, this is all really reinforcement learning, and decision-making.” We’re putting things on the screen and we’re using supervised learning to solve a problem that isn’t really a supervised learning problem.

Adam: Traditional recommender systems try to learn what you like from your history. Amazon knows that people with my shopping history would like certain books, so it ranks them by probability and shows me the top five or something like that.

But what if recommendations were more like a game, more like capture the flag? The computer player shows me recommendations and its goal is to show me things that I’ll end up buying. So it’ll probably show me the top probability items, but occasionally it’ll throw in some other things so that it can learn more about me. If I buy those, it will know something new and it will be able to provide even better recommendations in the future.

Adam: In your capture the flag game, there’s the fog of war, there’s like things you don’t know. And it’s like, “Okay, so I’m on YouTube and you know that I like, whatever, Computerphile and all their videos.” But you don’t know about something else that I might like. So you could just try to explore that space that is me, try to throw something at me and see what happens. Is that the idea?

Jason: Yeah. Exactly right. That’s called the explore exploit dynamic. So you can exploit, which means show the thing that you’re most likely to engage with. Or you could explore, you could say, “Well, maybe once per day we’ll show Adam something totally random. If he interacts with it, then we’ve learned something really profound.” There’s all these other times we could have shown you that same thing that we didn’t. And so that’s really useful to know. And so yeah, that isn’t captured by taking all these signals and then just maybe adding them up and sorting. That’s not going to expose that explore exploit dynamic. To expose that dynamic, you have to occasionally pick an item that has a really low score.
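One common way to get that explore exploit behavior is an epsilon-greedy rule: usually show the top-scoring items, and occasionally swap one slot for an item that sorting alone would never surface. The sketch below assumes made-up item names and scores, and the `recommend` function is hypothetical; it is not how YouTube or Facebook actually rank.

```python
import random

def recommend(scores, k=3, explore_prob=0.1, rng=None):
    """Return k items: mostly the highest scores, sometimes one random outsider."""
    rng = rng or random.Random()
    ranked = sorted(scores, key=scores.get, reverse=True)
    picks = ranked[:k]  # exploit: the items most likely to be engaged with
    if len(ranked) > k and rng.random() < explore_prob:
        # Explore: replace the last slot with an item from outside the top k.
        picks[-1] = rng.choice(ranked[k:])
    return picks

# Hypothetical predicted engagement scores for one viewer.
scores = {"video_a": 0.9, "video_b": 0.8, "video_c": 0.7,
          "video_d": 0.2, "video_e": 0.1}

always_exploit = recommend(scores, explore_prob=0.0, rng=random.Random(0))
always_explore = recommend(scores, explore_prob=1.0, rng=random.Random(0))
print(always_exploit, always_explore)
```

If the viewer engages with the low-scoring pick, that outcome is new information the pure sort-by-score approach would never have collected.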

Jason Moves To Facebook To Solve It

Adam: In other words, these recommender systems, they need to make decisions. They’re like AI bots, like a Go player or a capture the flag bot. It’s a reinforcement learning problem, but nobody’s approaching it this way. It’s not even totally clear how to do it.

Jason: So yeah, I talked to this gentleman, his name was Hussein Mehanna, who’s now leading AI at Cruise. And he was a director at Facebook. And when I met Hussein, basically the gist of what he said is, “I don’t really know how to do it either, but I want you to come and figure it out.” That to me really ignited something. I felt that passion to really push state-of-the-art. And it’s something really transformational, because it is a control problem, and nobody really knew how to solve it using the technology that is designed to solve it. I just found that super appealing.

So I came to Facebook about five years ago with the intent of cracking that. Yeah, it’s been a pretty, pretty wild ride.

The Newsfeed

Adam: So was there a specific task or was it just…?

Jason: Initially, I was brought in to basically rethink the way that the ranking system works at Facebook. So for people who don’t know, when you go to Facebook, whether it’s on the app or the website, you see all these posts from your friends, but they’re not in chronological order. And actually a lot of people complain about that, but it turns out being in chronological order is actually terrible and we do experiments and it’s horrible. People are in a state of… And I put myself in this category. I was in a state of unconscious ignorance about chronological order.

It sounds great, you have your position and you can always just start there and go up, you never miss a post. It fails when your cousin posts about crochet 14 times a day. And everyone has that one friend. And you just don’t realize it because the posts aren’t in chronological order. So they thought, “Well, we could use reinforcement learning to fix all of these cold start problems and all these other challenges that we’re having.” Clickbait, all these things can be fixed in a very elegant way if you take a control theoretic approach, versus if you’re doing it by hand, it gets really complicated.

Adam: This is the recommender thing all over again. If Facebook can explore what your preferences are, it can learn more about you and give you a more valuable newsfeed. So Jason joins Facebook and he spins up a small team, but things are a bigger challenge than he thought they would be.

Struggling at Facebook

Jason: So it’s interesting. It started off as me with a few contractors, so short term employees. And these were actually extremely talented people in reinforcement learning, but they worked for a consulting company, think of it as a Deloitte or McKinsey or that type of thing. And so they had no intention of being full-time at Facebook or anything like that. And we worked on it and we just couldn’t really get it to work. And so after their contract expired, we didn’t renew it. And I was a little bit lost because I wasn’t really sure how to get it to work either, but I kept working on it on my own.

It was a really odd time because I was starting to feel more and more guilty, because I came in as a person with all these years of experience from these prior companies, and I was contributing zero back to the top line or the bottom line or any line. I was just spending the company’s money. People joke about how nice it would be to be a lazy rider. You hear this stereotype of the rest and vest. So you heard about this in the nineties, people at Microsoft who had these giant stock grants that exploded and they would just sit there and play Solitaire until their stock expired or whatever. I realized being a lazy rider, actually it’s terrible. You really need to have a Wally mentality, from Dilbert. And in my case, I wasn’t lazy, I was working super hard, but I was a rider in the sense that I was being funded by an engine that I wasn’t able to contribute to.

And it felt terrible. I didn’t even get good ratings and all of it was really tough. And I actually, at one point had a heart to heart with my wife and I was thinking, “Should I quit this? Or should I keep trying to do it?” I really thought that it was going to work the whole time. I was really convinced that it would work. And so what I decided to do is I decided to ask for permission to open source it. And the reason was I felt like if they fired me, I could keep working on it after I was fired. So they were totally fine open sourcing it, my director, it wasn’t really on his radar. So he just said approved. And so it got open sourced.

Adam: Because it wasn’t contributing to any bottom line, so they were like, “What’s the big deal?”

Jason: Yeah, it was totally below the radar. So it’s just the way someone would approve a meal reimbursement or something. It’s just the word approved, and then all of the code could go out on GitHub.

ReAgent.ai

Adam: This project is on GitHub right now and at reagent.ai. In retrospect, it seems like the project might’ve been failing because Jason was targeting the wrong team.

Jason: There’s this interesting catch-22 where the teams that are really important are also almost always under the gun. And it’s very hard for them to have real freedom to pursue something. And so you end up with a lot of the contrarian people end up on fringe teams. And so there was a gentleman, his name was Shu. Shu was on this team that was pretty out on the fringe, they were notifying people who are owners of pages. So for people that aren’t familiar with Facebook, there’s pages on Facebook, which are like storefronts. So there’s a McDonald’s page and there’s a person or a team of people who own that page. They can have editorial rights and stuff like that. And so these were notifications going out to people who own pages basically informing them about their page. A page like McDonald’s, there are things changing all the time. So if you were to just notify everyone about everything, it would just blow up their phone.

Facebook Page Notifications

Adam: What type of notifications would I get? What’s going on with my page?

Jason: You have a lot more likes this week than usual or fewer than usual. Yeah. There’s 13 people who want to join your group that you have to approve or not. So it’s the same team that does groups and pages, these kinds of things.

Adam: Yeah. These are all things that theoretically somebody wants to get, but if their page is busy, it’s just way too much information.

Jason: Yeah, exactly. And on the flip side, if their page is totally dead and one person joins maybe every two months, it’s probably just annoying to send it to them. So yeah, part of the challenge there is coming up with that metric of, “What value are we actually providing? And how do you measure that without doing a survey or something like that?” And so they were using decision trees to figure out the probability that somebody would tap on the notification. And if that probability was high enough, they would show it. But at the end of the day, they don’t want people to just tap on notifications. What they really wanted was to provide value. And so we could look at things like, “Is your page growing? Are you taking care of people who want to join your group? Are you actually going through?” If we sent you a notification, then are you going to go and approve or reject these folks? And if we don’t send you the notification, are you not going to? Because if you’re going to do it anyways at four o’clock and we notify you at 3:00

Adam: Because they would just end up optimizing for the message you’d be most likely to click on. That’s not really what they care about, right, just that you’re tapping these things?

Jason: Exactly. Yeah, exactly. A lot of us don’t like getting notifications for things that we would have either done anyways or have no interest in. We wanted a better mouse trap. Facebook is actually not in the business of just always sending people notifications. They do have social scientists and other people who are trying to come up with real value and measuring that objectively.

Putting ReAgent Into Action

Adam: It’s not the newsfeed, but Jason has found a team who’s willing to try his reinforcement learning approach. Here, the action is binary, send the message or don’t. So I have a page for this podcast, CoRecursive, and I do nothing with it. And then, so your ReAgent, it gets some set of data, like, “Here’s stuff about Adam and how he doesn’t care about this page.” And then, “Okay, we have this notification, what should we do?” Is that the type of problem?

Jason: Yeah, pretty much. Imagine an assembly line of those situations. So there’re billions of people and we have this assembly line and it just has a context. Person hasn’t visited their page in 10 days, here’s a whole bunch of other context, here’s how often they approve requests to join the group, et cetera. And then we have to press the yes button or the no button. And so yeah, it’s flying through at an enormous rate. Billions and billions of these are going through this line and we’re ejecting most of them, but we let the ones through that we think will provide that value. And so what we’re doing is we’re looking at how much value are you getting out of your page if we don’t send the notification? How much value are you getting out of your page if we do?

And then that gap, when that gap is large enough, then we’ll send it.
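The difference between the older tap-probability rule and the value-gap rule Jason describes can be sketched in a few lines. The thresholds and the predicted values here are hypothetical numbers; in practice they would come from trained models, and this is not ReAgent's actual API.

```python
def send_by_tap_probability(p_tap, threshold=0.5):
    # Older approach: send whenever a tap is likely enough.
    return p_tap >= threshold

def send_by_value_gap(value_if_sent, value_if_not_sent, min_gap=0.1):
    # Value-gap approach: send only when the notification is predicted
    # to change the outcome by a large enough margin.
    return (value_if_sent - value_if_not_sent) >= min_gap

# Page owner who would have approved the requests anyway: a tap is
# likely, but the notification adds no predicted value.
active_owner = {"p_tap": 0.8, "value_if_sent": 1.0, "value_if_not_sent": 1.0}

# Page owner who rarely taps, but acts only when reminded.
forgetful_owner = {"p_tap": 0.3, "value_if_sent": 1.5, "value_if_not_sent": 0.5}

for owner in (active_owner, forgetful_owner):
    print(send_by_tap_probability(owner["p_tap"]),
          send_by_value_gap(owner["value_if_sent"], owner["value_if_not_sent"]))
```

The two rules disagree on both owners, which is the point: optimizing for taps and optimizing for value can lead to opposite decisions.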

Offline Reinforcement

Jason: One area which is our niche is that we do this offline. So there’s plenty of amazing reinforcement learning libraries that work either with a simulator or they’re meant to be real time, like the robot is learning while it’s moving around. But in our case, you give us millions of experiences, and then we will look at all of them at once and then train a model. And then you can use this model for the next million experiences. Just to explain through an absurd example, let’s say you had a Go playing AI, so something AlphaGo would do. And let’s say, at any given time, if you were to just stop training, there’s a 99% chance you’d have a great player and a 1% chance you’d have a terrible player.

Adam: Yeah.

Jason: Well, that’s not a really big deal for them, because they’ll just stop if it’s bad, they’ll stop playing. Or they could just train two in parallel or something. And now you have, what is it, one over 10,000 chance of having a bad player? But for us, we can’t do that. If we stop and then it’s a bad player, that player goes out to a billion people and it’s going to be a whole day like that. And so a lot of academics haven’t thought about that specific problem. And that’s something that our library does really well.
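The one over 10,000 figure follows from the 1% chance in Jason's example, assuming the two parallel training runs fail independently (that independence is an assumption of this sketch):

```python
p_bad = 0.01   # chance that a single training run ends on a terrible player
runs = 2       # train two players in parallel

# Both runs must be bad at the same time, assuming they are independent.
p_all_bad = p_bad ** runs
one_in = round(1 / p_all_bad)

print(f"chance both are bad: 1 in {one_in}")
```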

Adam: I also assume AlphaGo can play against itself. You don’t have an Adam with a page up there to manage.

Jason: Yep.

Adam: You have to learn actively against the real world.

Jason: Yeah, that’s right. We have to learn from our mistakes. There is no self play, there’s no Facebook simulator or anything like that. What AlphaGo does is it just does reinforcement learning. So it’s just constantly trying to make what it thinks is the best move and learn from that. What we do is we start from scratch and we say, “Whatever generated this data, can I copy their strategy?” Even if it’s a model that we put out yesterday, we still take the same approach, which is, can I at least copy this and be confident that I’m going to make the same decision given the same context? Once we have that, then we’re safe. We say, “Okay, I am 99.9% confident that this system is equivalent to the one that generated the data. I could ship it.” Then we’ll start reinforcement learning. And then when we do reinforcement learning, it’s going to start making decisions where we don’t really know what’s going to happen.

And as we train and train and train, it will deviate from what we call the production policy, whatever generated the data, it’s going to deviate more and more from that. And the whole time it’s deviating, we’re measuring that deviation. At some point we can stop training and say, “I don’t know with a degree of certainty that this model isn’t worse than what we already have out there. I’m only 99% sure that this model isn’t worse, and so now I’m going to send it out there.” Or it could be 99.9, whatever threshold we pick. The more confident you want to be that the model isn’t worse, the less it’s able to change from whatever’s out there right now. And so you have these two loops now, you have the training loop, and then you have this second loop, which is show the model to the real world, get new data, and then repeat that. Because that second loop takes an entire day to do one revolution, we have models that we launched a year ago that are still getting better.
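A very rough sketch of that guardrail idea: start from a copy of the production policy, let successive training checkpoints drift away from it, and ship the last checkpoint that stays within a chosen limit. Everything here is a simplifying assumption. The "days inactive" context, the `make_policy` helper, and the use of plain agreement with logged decisions as the deviation measure are all hypothetical; the statistical confidence Jason describes is more involved, and this is not ReAgent's API.

```python
def make_policy(min_days_inactive):
    """Hypothetical policy: send (1) once the owner has been away long enough."""
    def policy(days_inactive):
        return 1 if days_inactive >= min_days_inactive else 0
    return policy

# Logged data: contexts and the decisions the production policy made.
production = make_policy(10)
logged = [(days, production(days)) for days in range(20)]

def agreement(policy, logged_decisions):
    """Fraction of logged contexts where the policy repeats the logged action."""
    same = sum(1 for context, action in logged_decisions
               if policy(context) == action)
    return same / len(logged_decisions)

def last_safe_checkpoint(checkpoints, logged_decisions, min_agreement):
    """Keep the latest checkpoint that has not drifted past the limit."""
    chosen = None
    for policy in checkpoints:
        if agreement(policy, logged_decisions) < min_agreement:
            break  # drifted too far from production; stop here
        chosen = policy
    return chosen

# Checkpoints that drift further from production as training continues.
checkpoints = [make_policy(t) for t in (10, 9, 8, 7, 6)]
shipped = last_safe_checkpoint(checkpoints, logged, min_agreement=0.88)
print(agreement(shipped, logged))
```

The first checkpoint is an exact copy of production, which mirrors the "can I copy their strategy" step. Raising `min_agreement` makes the shipped model safer but lets it change less, which is the trade-off Jason describes.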

Adam: Instead of capture the flag, this sounds more like simultaneous chess. The AI is playing chess against billions of people each day, and then at night it analyzes its games and comes up with improved strategies and things it might try out next time. Actually, this makes me think about the documentary about AI.

The Social Dilemma

Adam: Something that comes to mind with all this, I don’t know whether you want to answer this or not. What do you think of the movie The Social Dilemma? It seems very relevant to what we’re talking about.

Jason: Yeah. I haven’t seen it, but I’ve heard a lot of critiques. And I know some of the folks who are in the movie and I think there’s a lot of truth to it. And again, I haven’t seen it, so take this with a grain of salt. But one thing that I noticed is missing, at least from a lot of these critiques, is there’s this assumption of guilt in terms of the intent. There’s this idea that we have this capitalist engine and it’s just maximizing revenue all the time. And so because engagement drives revenue, then you’re maximizing engagement all the time. The reality is it’s not true. In my experience, there isn’t that much pressure on companies like Facebook and Google and Apple. There’s pressure because there’re things we want to do and everything, but there isn’t this capitalist pressure on Facebook. It’s not like the airline industry where they have razor-thin margins.

I do think that we’re trying to find that way to really increase the value, to provide real value to people. And I think we do that. The vast majority of Facebook, if you were to trawl through the data, it’s basically people just acknowledging each other. The vast majority of comments are congratulations or something like that. That’s probably the number one comment, once you adjust for language and everything. And that is really what we’re trying to optimize for, are those good experiences. I don’t work on the social science part of it. We try to optimize and we do it on good faith that the goals we’re optimizing for are good faith goals. But I’ve been in enough of the meetings to see the intent is really a good intent. It’s just a thing that’s very difficult to quantify.

But I do think that the intent is to provide that value. And I do think that they would trade some of the margin for the value in a heartbeat. Yeah. With all that said, I think it’s important to keep tabs on how much time you spend on your phone, and just look at it and be honest with yourself. And this is true of everything. I’m not a TV watcher, but if I was, I would do the same thing there. And I catch myself, I get really into a video game, and next thing you know, I realize I’m spending three, four hours a day on this video game. And you could do the same thing with Facebook and everything else. So it’s good to have discipline there. And it’s a massive machine-learning engine that is doing everything it can to optimize whatever you try to optimize. So that part of The Social Dilemma is true, I just think the intent is a little bit misconstrued there. That’s my personal take on it.

Adam: I think social media is like fast food. McDonald’s fries are super delicious, best fast food French fries. But if that’s your only source of food, or if social media is your only form of social interaction, then that’s going to be a problem. But I’m not sure we need a moral panic. Anyways, let’s find out how the notifications project worked out.

Notification Success

Jason: Yeah. So our goal going into it was to reduce the number of notifications while still keeping the same value. So there was a measure of how much value we were providing to people, as I said, based on how they interact with the website. And we reduced notifications something like 20 or 30%, and we actually caused the value to increase slightly. And so people were really excited by that.

Adam: So did your performance reviews get better?

Jason: Yeah. So just to take this timeline to its conclusion, so then we ended up having more and more success in this area of, I think it’s technically called re-engagement marketing. Basically, you have someone who’s already a big fan of Toyota, when do you actually send them that email saying, “Hey, maybe you should buy another Toyota or trade in or something”? Even though our goal is actually not to drive engagement, it’s really just to send fewer notifications. But at the end of the day, the thing that we want to preserve is that value. And so we found that niche and we just started democratizing that tech. And then at some point it became just too much for me to do by myself. So I didn’t get fired, I’m still at Facebook. They haven’t fired me yet. There’s that saying, you get hired for what you know, but fired for who you are. I think I put that one to the test. But yeah, the performance reviews got better, and I switched to managing, which has been itself a really, really interesting experience.

Adam: Jason succeeded, he got people to see that these problems were reinforcement learning problems, and he got them to use his project. It’s been at least five years since he joined Facebook, but the newsfeed team is starting to use ReAgent. And yeah, it’s open source, so check it out. Reading between the lines, it seems like this whole thing took its toll on Jason.

The Mental Toll of the Journey

Jason: The thing I realized is that for me at least, I reached the finish line. I always joke with people that this is the last big company I’m ever going to work for. And I reached that finish line, and when you do that, you could try and find the next finish line or you could turn back around and help the next person in line. And being a manager is my way to do that. I’m still super passionate about the area, I’m not checked out or anything like that, but I’m done in terms of the career race. I’ve hit my finish line, and so let me turn back around and just try and help as many people as I can over the wall.

Adam: So that was the show. I’m going to try something new. If you want to learn a little bit more about Jason and about Reagent, go to corecursive.com/reinforcement.

I’m going to put together a PDF with some things covered in this episode. I haven’t done it yet, so you’ll have to bear with me. Jason can be found on Twitter at neuralnets4life, that’s the number four. He’s very dedicated to neural nets. And he also has a podcast, which is great, and I’ll let him describe.

Programming Throwdown

Jason: If you enjoy hearing my voice, you could check out our podcast. We don’t talk that much about AI, but I have a podcast that I co-host with a friend of mine that I’ve known for a really long time, a podcast called Programming Throwdown. And we talk about all sorts of different programming topics, everything from languages, frameworks. And we’ve had Adam on the show a couple of times, it’s been really amazing. We’ve had some really phenomenal episodes. We talked about working from home together on an episode. So you can check me out there as well.

Adam: Thank you to Jason for being such a great guest and sharing so much. And until next time, thank you so much for listening.
